Difference between GPT-1, GPT-2, GPT-3/3.5 and GPT-4

The term GPT has been used in the AI (artificial intelligence) field for several years, but it only became widely known with ChatGPT, the conversational AI chatbot that took the internet by storm.


What is GPT?

GPT stands for “Generative Pre-trained Transformer” and it is a type of artificial intelligence (AI) language model.

It’s like a smart computer program that has been trained on a huge amount of text and code.

GPT models can do cool things like generating text, translating languages, writing creative content, and answering questions in a helpful way.
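To make the word "generative" a little more concrete, here is a toy sketch of generating text one word at a time, where each new word depends on what came before. It is only an illustration: it uses a simple word-pair lookup table rather than a transformer, and the names `train_bigram` and `generate` are made up for this example.

```python
import random
from collections import defaultdict


def train_bigram(text):
    """Record, for each word, the words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, max_words=8, seed=0):
    """Generate text one word at a time, each choice based on the previous word."""
    rng = random.Random(seed)
    output = [start]
    while len(output) < max_words:
        candidates = followers.get(output[-1])
        if not candidates:  # no known continuation: stop early
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram(corpus)
print(generate(model, "the"))
```

A real GPT model follows the same loop of predicting the next piece of text and appending it, but it looks at the whole preceding context and uses billions of learned parameters instead of a lookup table.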

What is GPT used for?

As mentioned above, GPT is used for generating content, translating languages, summarizing text such as research papers, and answering questions, to name a few uses.

ChatGPT, for example, is an AI chatbot based on the GPT model. It is a pre-trained GPT model that has been fine-tuned on a large dataset of conversational data to generate human-like responses in natural language conversations.

Here are some of the applications of GPT:

- Generation of content – GPT models can generate text for various purposes, such as writing articles, blog posts, product descriptions, or social media content. They can assist in creating content quickly and efficiently.

- Language translation – GPT models can be used to translate text from one language to another. They learn patterns and structures in multiple languages during training, enabling them to provide translation services.

- Answering questions – GPT models can answer questions posed in natural language. They can process the question and generate relevant and informative responses, making them useful for chatbots, customer support systems, or information retrieval.

- Summarizing texts – GPT models can generate summaries of longer text documents. This can be particularly useful for news articles, research papers, or lengthy documents where concise summaries are required.

- Dialogue systems and chatbots – GPT models like ChatGPT can power chatbots and dialogue systems, enabling interactive and engaging conversations with users. They can understand user queries, provide relevant responses, and assist with various tasks.

- Creative writing – GPT models have been used to generate creative content, such as poems, stories, or scripts. They can mimic the style and structure of different genres, providing assistance to writers or generating content for entertainment purposes.

- Language modeling research – GPT models are also used in research to advance the field of natural language processing and language understanding. They serve as benchmarks for evaluating model performance and exploring new techniques.

GPT Versions: GPT-1, GPT-2, GPT-3/3.5 and GPT-4

There are four main versions of GPT, which are:

- GPT-1

- GPT-2

- GPT-3/GPT-3.5

- GPT-4

1/ GPT-1

GPT-1 is a generative pre-trained transformer model developed by OpenAI. It was released in 2018 and had 117 million parameters (the numeric values a model learns during training).

GPT-1 was trained on BooksCorpus, a collection of roughly 7,000 unpublished books.

GPT-1 could generate new text and, after being fine-tuned for a specific task, handle jobs such as answering questions or classifying text. But compared to newer versions like GPT-2 and GPT-3, GPT-1 wasn’t as good at these things.

Sometimes, it would repeat itself too much, and it wasn’t very good at understanding long pieces of text.

GPT-1 was a significant achievement in natural language processing (NLP), but it was quickly surpassed by later models.

NLP is a technology that helps computers understand and use human language.

It helps computers perform tasks such as recognizing speech, translating languages, analyzing sentiment, and answering questions.

Even though GPT-1 wasn’t the most advanced model, it was an important step in developing better computer programs for understanding and using human language. It helped researchers learn and make improvements, leading to newer and more powerful models like GPT-2, GPT-3 and currently GPT-4.

2/ GPT-2

GPT-2 is also a generative pre-trained transformer model developed by OpenAI, released in 2019. It has 1.5 billion parameters, more than ten times as many as GPT-1.

GPT-2 was trained on WebText, a dataset of about 40 GB of text drawn from roughly 8 million web pages that were linked from Reddit.

With GPT-2, you can ask it questions, and it can generate new text, translate languages, and even write creative things like stories. It’s like having a smart assistant that can help you with different tasks.

However, GPT-2 sometimes makes mistakes and produces text that doesn’t make much sense or repeats itself too much.

Even though GPT-2 has since been surpassed by newer models, it showed a lot of potential for many useful things. It can be used by researchers, teachers, and even for fun.

For example, GPT-2 can help create chatbots that have realistic conversations, write interesting stories, or translate languages. It’s an exciting tool that can do a lot with language!

3/ GPT-3 / GPT-3.5

GPT-3 is an advanced language model developed by OpenAI. It can generate human-like text and power chatbot-style conversations.

It was released in 2020 with a massive 175 billion parameters, a huge jump over GPT-2. It could generate even more realistic and complex text, and it could perform many tasks from just an instruction or a few examples in the prompt, without needing extra training.

So it can understand and respond to a wide range of prompts and questions. For example, GPT-3 can summarize factual information or even create stories.
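Doing a task "without extra training" usually means showing the model a few solved examples directly in the prompt, often called few-shot prompting. Below is a minimal sketch of how such a prompt could be assembled. The function name `build_few_shot_prompt` and the `Input:`/`Output:` layout are assumptions made for illustration, not a format required by OpenAI, and the code only builds the text; it does not call any model.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a prompt that shows a few solved examples before the new input."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final "Output:" is left empty for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("good morning", "bonjour")],
    "thank you",
)
print(prompt)
```

The resulting text would then be sent to the model, which is expected to continue it with the missing output.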

GPT-3 offers many benefits:

- It generates text that feels human-like and engaging.

- It can be used for a wide range of tasks, including creative content generation and language translation.

- OpenAI has released improved variants over time, so the model family has kept getting better.

However, there are also some limitations to keep in mind:

- Sometimes, GPT-3 may generate repetitive or nonsensical text.

- It can also exhibit biases or inaccuracies in its responses.

- Responsible usage of GPT-3 is crucial, along with being aware of its limitations.

GPT-3.5

GPT-3.5 is an update to the GPT-3 family that was released in 2022. OpenAI has not published its parameter count, but it has said that the GPT-3.5 series was trained on a blend of text and code.

This training data, combined with fine-tuning to follow instructions, helps GPT-3.5 generate text that is more realistic and useful than its predecessor's.

Additionally, GPT-3.5 exhibits enhanced capabilities in performing various tasks without requiring additional training.

Although GPT-3.5 is an incremental step rather than a whole new generation, it is still noticeably better than GPT-3 in terms of performance and potential usefulness.

ChatGPT was originally fine-tuned from a model in the GPT-3.5 series.

4/ GPT-4

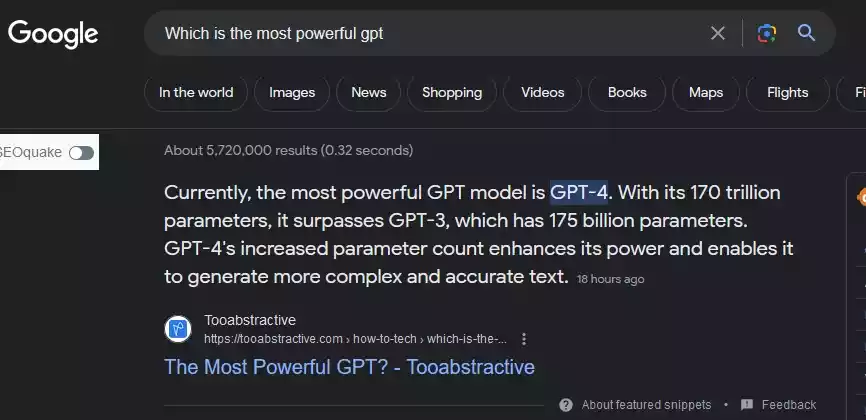

GPT-4 is the newest of the four versions covered here, released in March 2023. OpenAI has not disclosed its parameter count, and the widely circulated figure of 100 trillion parameters is an unconfirmed rumor. On the benchmarks OpenAI has reported, it outperforms its predecessors, GPT-3 and GPT-3.5.

Initially, it was made available in a limited form through ChatGPT Plus, the paid tier of ChatGPT.

GPT-4 is designed to generate text that is even more realistic and complex. It has undergone significant improvements, allowing it to perform a wide range of tasks without the need for additional training.

One notable enhancement in GPT-4 is that it is a multimodal model, meaning it can accept both images and text as input and respond with text. This allows it to describe humor in unusual images, summarize text from screenshots, and even answer exam questions that contain diagrams.

As GPT-4 continues to evolve and improve, it is expected to have a significant impact across various fields, such as education, healthcare, and customer service.

Difference between GPT-1, GPT-2, GPT-3 and GPT-4

| | GPT-1 | GPT-2 | GPT-3 | GPT-4 |
|---|---|---|---|---|
| Release date | 2018 | 2019 | 2020 | 2023 |
| Parameters (values learned during training) | 117 million | 1.5 billion | 175 billion | Not disclosed by OpenAI |
| Training data | BooksCorpus, roughly 7,000 unpublished books | WebText, about 40 GB of text from roughly 8 million web pages | A filtered web crawl plus books and Wikipedia, about 300 billion tokens seen during training | Not disclosed by OpenAI |
| Capability | Able to generate text and, after fine-tuning, handle tasks such as answering questions | Able to generate more realistic and complex text | Able to generate even more realistic and complex text and to perform many tasks without any additional training | Able to generate even more realistic and complex text and to perform many tasks without any additional training. It can also accept images as well as text as input. |

Note:

OpenAI has not published the parameter count or training data size for GPT-4, and the training data figures for the earlier models are approximate.
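To get a feel for how quickly the models grew, the short sketch below compares the publicly reported parameter counts for GPT-1, GPT-2 and GPT-3. The storage estimate assumes each parameter is stored as a 16-bit number (2 bytes); that is an assumption for illustration, not a figure from OpenAI, and GPT-4 is left out because its size has not been published.

```python
# Publicly reported parameter counts.
PARAMS = {
    "GPT-1": 117_000_000,
    "GPT-2": 1_500_000_000,
    "GPT-3": 175_000_000_000,
}

BYTES_PER_PARAM = 2  # assumption: weights stored as 16-bit numbers


def growth_factor(older, newer):
    """How many times more parameters the newer model has."""
    return PARAMS[newer] / PARAMS[older]


def weights_size_gb(model):
    """Approximate size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return PARAMS[model] * BYTES_PER_PARAM / 1e9


print(f"GPT-1 -> GPT-2: about {growth_factor('GPT-1', 'GPT-2'):.1f}x more parameters")
print(f"GPT-2 -> GPT-3: about {growth_factor('GPT-2', 'GPT-3'):.1f}x more parameters")
for name in PARAMS:
    print(f"{name}: about {weights_size_gb(name):.1f} GB of weights")
```

Under that 2-byte assumption, GPT-3's weights alone come to about 350 GB, which is one reason models of this size run on clusters of specialized hardware rather than on a single ordinary computer.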